by Robyn Bolton | Feb 8, 2026 | AI, Leadership, Leading Through Uncertainty, Strategic Foresight, Strategy

In 2023, Klarna’s CEO proudly announced it had replaced 700 customer service workers with AI and that the chatbot was handling two-thirds of customer queries. Labor costs dropped and victory was declared.

By 2025, Klarna was rehiring. Customer satisfaction had tanked. The CEO admitted they “went too far,” focusing on efficiency over quality.

Like Captain Robert Scott, Klarna misjudged the circumstance it was in, applied the wrong playbook, and lost. It thought it had facts, but all it had were technical specs. It made tons of assumptions about chatbots’ ability to replace human judgment and about how customers would respond.

Calibrated Decision Design, a process for diagnosing your circumstances before picking a playbook, consistently proves to be a quick and necessary step to ensure success.

When you have the facts and need results ASAP: Go NOW!

General Mills, like its competitors, had been digitizing its supply chain for years, so it had facts based on experience and a list of the facts it still needed.

To close the gap and achieve end-to-end visibility in its supply chain, it worked with Palantir to develop a digital twin of its entire supply chain. Results: 30% waste reduction, $300 million in savings, and decisions that once took weeks now take hours. It proves that you don’t need all the answers to make a move, but you need to know more than you don’t.

When you have hypotheses but can’t wait for results: Discovery Planning

Morgan Stanley Wealth Management’s (MSWM) clients expect advisors to bring them bespoke advice based on mountains of analysis and insights. But it’s impossible for any advisor to process all that data. Confident that AI could help but uncertain whether it would improve relationships or create friction, MSWM partnered with OpenAI.

Within six months, they debuted a GenAI chatbot to help Financial Advisors quickly access the firm’s IP. Document retrieval jumped from 20% to 80%, and 98% of advisors now use it daily. Two years later, MSWM expanded with a meeting tool that turns meetings into actionable outputs and updates the CRM with notes and follow-ups. It’s a perfect example of how a series of experiments leads to a series of successes.

When you have facts and time to achieve results: Patient Planning

Drug discovery requires patience and, while the process may be predictable, the results aren’t. That’s why pharma companies need strategies that are as thoughtfully planned as they are responsive.

Lilly is doing just that by investing in its own capabilities and building an ecosystem of partners. It started by launching TuneLab, a platform offering access to AI-enabled drug discovery models based on data that Lilly spent over $1 billion developing. A month later, the pharma giant announced a partnership with NVIDIA to build the pharmaceutical industry’s most powerful AI supercomputer. Two months later, it committed over $6 billion to a new manufacturing facility in Alabama. These aren’t billion-dollar bets; they’re thoughtful investments in a long-term future that allows Lilly to learn now and stay flexible as needs and technology evolve.

When you’re making assumptions and have time to learn: Resilient Strategy

There’s no way of knowing what the global energy system will look like in 40 years. That’s why Shell’s latest scenario planning effort produced three distinct scenarios: Surge, Archipelagos, and Horizon. Multiple scenarios allow the company to “explore trade-offs between energy security, economic growth and addressing carbon emissions” and to build resilient strategies that help it recognize which one is unfolding and pivot before competitors even spot what’s happening.

Stop benchmarking. Start diagnosing.

It’s easy to feel like you’re behind when it comes to AI. But the rush to act before you know the problem and the circumstances is far more likely to make you a cautionary tale than a poster child for success.

So, stop benchmarking what competitors do and start diagnosing the circumstances you’re in, so you can use the playbook you need.

by Robyn Bolton | Feb 2, 2026 | AI, Leadership, Strategic Foresight, Strategy

It was a race. And the whole world was watching.

In 1911, Captain Robert Scott set out to reach the South Pole. He’d been to Antarctica before and, because of his past success, he had more funding, more expertise, and more experience. He had all the equipment needed.

Racing him to fame, fortune and glory was Norwegian Roald Amundsen. Originally heading to the North Pole, he turned around when he learned that Robert Peary had beaten him there. He had dogs and skis, equipment perfect for the Arctic but unproven in Antarctica.

Amundsen won the race by over a month.

Scott and his crew reached the Pole second and died on the return journey, 11 miles from a supply depot.

When the Playbook Stops Working

Scott wasn’t guessing. He’d tested motor sledges in the Alps. He’d seen ponies work on a previous Antarctic expedition. He built a plan around the best available equipment and the general playbook that had served British expeditions for decades: horses and motors move heavy loads, so use horses and motors.

It just wasn’t right for Antarctica. The motors broke down in the cold. The ponies sank through the ice. The plan that looked solid on paper fell apart the moment it met the actual environment it had to operate in.

The same thing is happening today with AI.

For decades, when new technologies emerged, executives followed a similarly familiar playbook: assess the opportunity, build a business case, plan the rollout, execute.

And for decades it worked. Cloud migrations and ERP implementations were architectural changes to known processes with predictable outcomes. As time went on, information grew more solid, timelines became better understood, and the playbook solidified.

AI is different. Executives are so focused on picking the right AI tools and building the right infrastructure that they aren’t thinking about what happens when they hit the ice. Even if the technology works as designed, you have no idea whether it will deliver the intended results or create a ripple of unintended consequences that paralyze your business and put egg on your face.

Diagnose Before You Prescribe

The circumstances of AI are different too, and that requires a new playbook. Make that playbooks. Picking the right playbook requires something my clients and I call Calibrated Decision Design.

We start by asking how long it will take to realize the ultimate goals of the investment. Do we need to break even this year, or is this a multi-year bet where results slowly roll in? Most teams have a sense of this, so it allows us to move quickly to the next, much harder question.

What do we know and what do we believe? This is where most teams and AI implementations fail. To seem confident and indispensable, people present hypotheses as if they were facts, resulting in decisions based on single data points or best guesses. The result is a confident decision destined to crumble.

Where you land on these two axes determines your playbook. Apply the wrong one and you’ll either waste money on over-analysis or burn through budget on premature action.

Pick from the Four Playbooks

Go NOW!: You have the facts and need results now. Stop deliberating. Execute.

Predictable Planning: You have confidence in the outcome, but the payoff takes patience. Build a flexible strategy and operational plan to stay responsive as things progress.

Discovery Planning: You need results fast, but you don’t have proof your plan will work. Run small, fast experiments before scaling anything.

Resilient Strategy: The time horizon is long and you’re short on facts. The worst thing you can do is go all in. Instead, envision multiple futures, identify early warning signs, find commonalities and prepare a strategy that can pivot.
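The two-axis diagnosis above can be sketched as a simple lookup table. This is a hypothetical illustration, not part of Calibrated Decision Design itself: it assumes each axis can be reduced to a single honest answer (facts or assumptions; results needed now or payoff that takes patience), and the names `Knowledge`, `Horizon`, `PLAYBOOKS`, and `pick_playbook` are invented for this sketch.

```python
from enum import Enum


class Knowledge(Enum):
    """Hypothetical axis: what the team actually has, verified facts or untested beliefs."""
    FACTS = "facts"
    ASSUMPTIONS = "assumptions"


class Horizon(Enum):
    """Hypothetical axis: how long until the investment must deliver its ultimate goals."""
    SHORT = "results needed now"
    LONG = "payoff takes patience"


# Each cell of the 2x2 maps to exactly one of the four playbooks named above.
PLAYBOOKS = {
    (Knowledge.FACTS, Horizon.SHORT): "Go NOW!",
    (Knowledge.FACTS, Horizon.LONG): "Predictable Planning",
    (Knowledge.ASSUMPTIONS, Horizon.SHORT): "Discovery Planning",
    (Knowledge.ASSUMPTIONS, Horizon.LONG): "Resilient Strategy",
}


def pick_playbook(knowledge: Knowledge, horizon: Horizon) -> str:
    """Return the playbook for an already-diagnosed circumstance."""
    return PLAYBOOKS[(knowledge, horizon)]


if __name__ == "__main__":
    # A team that needs results this year but is working from hypotheses.
    print(pick_playbook(Knowledge.ASSUMPTIONS, Horizon.SHORT))
```

The lookup itself is trivial; the hard part, as the post argues, is answering the second axis honestly instead of presenting beliefs as facts.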

Apply it

Which playbook are you using and which one is best for your circumstance?

by Robyn Bolton | Jan 25, 2026 | AI, Leadership, Leading Through Uncertainty

Spain, 1896

At the tender age of 14, Pablo Ruiz Picasso painted a portrait of his Aunt Pepa, a work of brilliant academic realism that would go on to be hailed as “without a doubt one of the greatest in the whole history of Spanish painting.”

In 1901, he abandoned his mastery of realism, painting only in shades of blue and blue-green.

There’s debate over why Picasso’s Blue Period began. Some argue that it’s a reflection of the poverty and desperation he experienced as a starving artist in Paris. Others claim it was a response to the suicide of his friend, Carles Casagemas. But Bill Gurley, a longtime venture capitalist, has a different theory.

Picasso abandoned realism because of the Kodak Brownie.

Introduced on February 1, 1900, the Kodak Brownie made photography widely available, fulfilling George Eastman’s promise that “you press the button, we do the rest.”

An ocean away, Gurley argues, Picasso’s “move toward abstraction wasn’t a rejection of skill; it was a recognition that realism had stopped being the frontier….So Picasso moved on, not because realism was wrong, but because it was finished.”

Washington DC, 2004

Three years before Drive took the world by storm, Daniel Pink published his third book, A Whole New Mind: Why Right-Brainers Will Rule the Future.

In it, he argues that a combination of technological advancements, higher standards of living, and access to cheaper labor are pushing us from a world that values left-brain skills like linear thought, analysis, and optimization towards one that requires right-brain skills like artistry, empathy, and big-picture thinking.

As a result, those who succeed in the future will be able to think like designers, tell stories with context and emotional impact, and combine disparate pieces into a whole greater than the sum of its parts. Leaders will need to be empathetic, able to create “a pathway to more intense creativity and inspiration,” and guide others in the pursuit of meaning and significance.

California, 2026

Barry O’Reilly, author of Unlearn, published his monthly blog post, “Six Counterintuitive Trends to Think about for 2026,” in which he outlines what he believes will be the human reactions to a world in which AI is everywhere.

Leadership, he asserts, will no longer be measured by the resources we control (and how well we control them to extract maximum value) but by judgment. Specifically, a leader’s ability to:

- Ask better questions

- Frame decisions clearly

- Hold ambiguity without freezing

- Know when not to use AI

The Price of Safety vs the Promise of Greatness

Picasso walked away from a thriving and lucrative market where he was an emerging star to suffer the poverty, uncertainty, and desperation of finding what was next. It would take more than a decade for him to find international acclaim. He would spend the rest of his life as the most famous and financially successful artist in the world.

Are you willing to take that same risk?

You can cling to the safety of what you know: the markets, industries, business models, structures, and incentives that have always worked. You can continue to demand immediate efficiency, obedience, and profit while experimenting with new tech and playing with creative ideas.

Or you can start to build what’s next. You don’t have to abandon what works, just as Picasso didn’t abandon paint. But you do have to start using your resources in new ways. You must build the characteristics and capabilities that Daniel Pink outlines. You must become the “counterintuitive” leader that embraces ambiguity, role models critical thinking, and rewards creativity and risk-taking.

Do you have the courage to be counterintuitive?

Are you willing to embrace your inner Picasso?

by Robyn Bolton | Jan 17, 2026 | AI, Leadership, Leading Through Uncertainty, Stories & Examples

You’ve clarified the vision and strategy. Laid out the priorities and simplified the message. Held town halls, answered questions, and addressed concerns. Yet the AI initiative is stalled in ‘pilot mode,’ your team is focused solely on this quarter’s numbers, and real change feels impossible. You’re starting to suspect this isn’t a “change management” problem.

You’re right. It’s not.

The Data You’re Not Seeing

You’ve been doing what the research tells you to do: communicate clearly and frequently, clarify decision rights, and reduce change overload. And these things worked. Until employees went from grappling with two to 10 planned change initiatives in a single year. As the number went up, willingness to support organizational change crashed, falling from 74% of employees in 2016 to 43% in 2022.

But here’s what the research isn’t telling you: despite your organizational fixes, your people are terrified. 77% of workers fear they’ll lose their jobs to AI in the next year. 70% fear they’ll be exposed as incompetent. And 66% of consumers, the highest level in a decade, expect unemployment to continue to rise.

Why doesn’t the research focus on fear? Because it’s uncomfortable. Messy. It’s a people (Behavior) problem, not a process (Architecture) problem and, as a result, you can’t fix it with a new org chart or better meeting cadence.

The organizational fixes are necessary. They’re just not sufficient to give people the psychological reassurance, resilience, and tools required to navigate an environment in which change is exponential, existential, and constant.

What Actually Works

In 2014, Microsoft was toxic and employees were afraid. Stack ranking meant every conversation was a competition, every mistake was career-limiting, and every decision was a chance to lose status. The company was dying not from bad strategy, but from fear.

CEO Satya Nadella didn’t follow the old change management playbook. He did more:

First, he eliminated the structures that created fear, including the stack ranking system, the zero-sum performance reviews, the incentives that punished mistakes. These were Architecture fixes, and they mattered.

And he addressed the messy, uncomfortable emotions that drove Behavior and Culture. He role modeled the Behaviors required to make it psychologically safe to be wrong. He introduced the “growth mindset” not as a poster on the wall, but as explicit permission to not have all the answers. When he made a public gaffe about gender equality, he immediately emailed all 200,000 employees: “My answer was very bad.” No spin. No excuses. Just modeling the vulnerability that he expected from everyone.

Ten years later, Microsoft is worth $2.5 trillion. Employee engagement and morale are dramatically improved because Nadella addressed the structures that fed fear AND the fear itself.

What This Means for You

You don’t need to be Satya Nadella. But you do need to stop pretending fear doesn’t exist in your organization.

Name it early and often. Not just in the all-hands meeting, but in the team meetings and lunch-and-learns. Be honest: “Some roles will change with this AI implementation. Here’s what we know and don’t know.” Make the implicit explicit.

Eliminate the structures that create fear. If your performance system pits people against each other, change it. If people get punished for taking smart risks, stop. If people ask questions or make suggestions, listen and act.

Be vulnerable. Share what you’re uncertain about. Admit when you don’t know. Show that it’s safe to be learning. Demonstrate that learning is better than knowing (or pretending to know).

The stakes aren’t abstract: That AI pilot stuck in testing. The strategic initiative that gets compliance but not commitment. The team so focused on surviving today they can’t prepare for tomorrow. These aren’t communication failures. They’re misaligned ABCs that allow fear to masquerade as pragmatism.

And the masquerade only stops when you align the ABCs all at once. Because fixing Architecture without changing your Behavior simply gives fear a new place to hide.

by Robyn Bolton | Dec 2, 2025 | AI

“It just popped up one day. Who knows how long they worked on it or how many millions were spent. They told us to think of it as ChatGPT but trained on everything our company has ever done so we can ask it anything and get an answer immediately.”

The words my client was using to describe her company’s new AI chatbot made it sound like a miracle. Her tone said something else completely.

“It sounds helpful,” I offered. “Have you tried it?”

“I’m not training my replacement! And I’m not going to train my R&D, Supply Chain, Customer Insights, or Finance colleagues’ replacements either. And I’m not alone. I don’t think anyone’s using it because the company just announced they’re tracking usage and, if we don’t use it daily, that will be reflected in our performance reviews.”

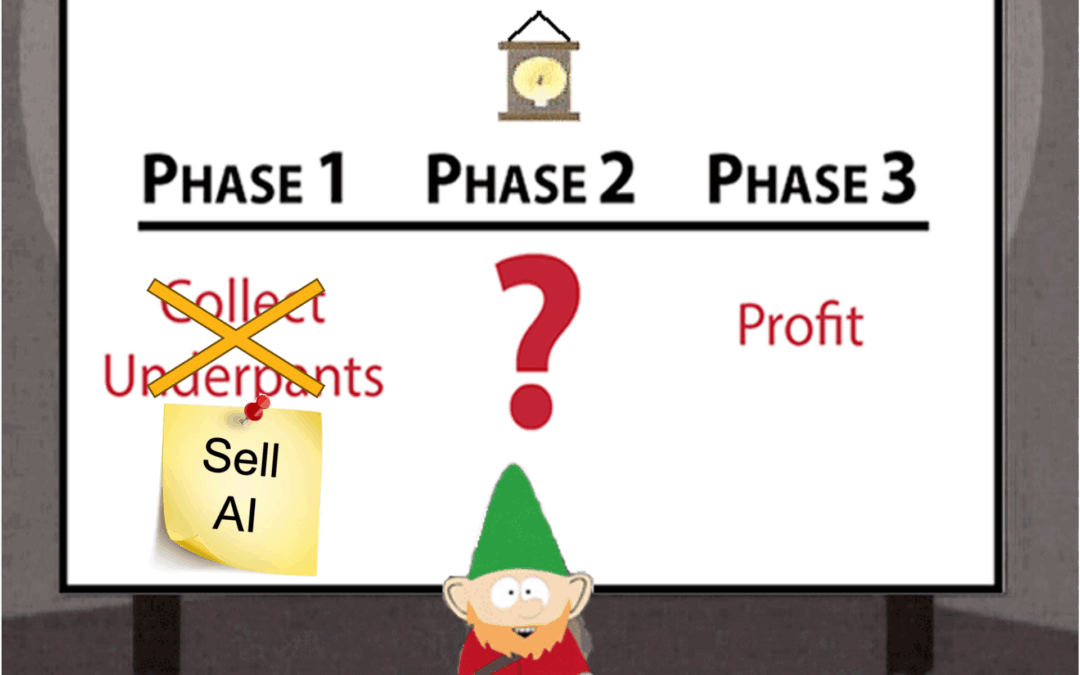

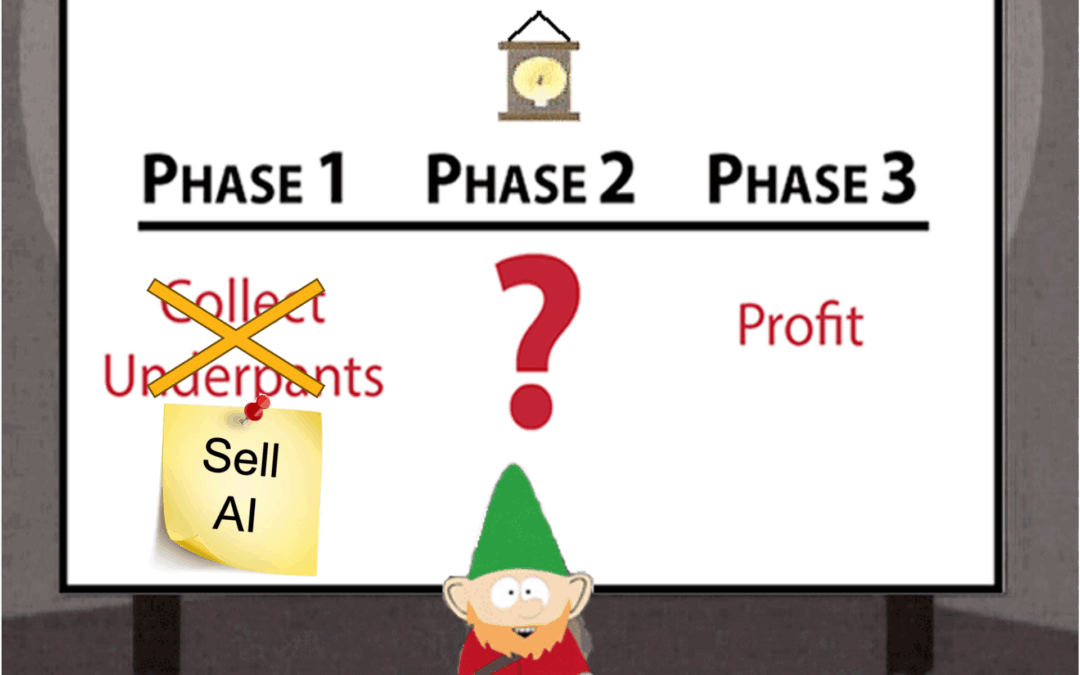

All I could do was sigh. The Underpants Gnomes have struck again.

Who are the Underpants Gnomes?

The Underpants Gnomes are the stars of a 1998 South Park episode described by media critic Paul Cantor as, “the most fully developed defense of capitalism ever produced.”

Claiming to be business experts, the Underpants Gnomes sneak into South Park residents’ homes every night and steal their underpants. When confronted by the boys in their underground lair, the Gnomes explain their business plan:

- Collect underpants

- ?

- Profit

It was meant as satire.

Some took it as an abbreviated MBA.

How to Spot the Underpants AI Gnomes

As the AI hype grew, fueling executive FOMO (Fear of Missing Out), the Underpants Gnomes, cleverly disguised as experts, entrepreneurs, and consultants, saw their opportunity.

- Sell AI

- ?

- Profit

While they’ve pivoted their business focus, they haven’t improved their operations, so the Underpants AI Gnomes are still easy to spot:

- Investment without Intention: Is your company investing in AI because it’s “essential to future-proofing the business?” That sounds good but if your company can’t explain the future it’s proofing itself against and how AI builds a moat or a life preserver in that future, it’s a sign that the Gnomes are in the building.

- Switches, not Solutions: If your company thinks that AI adoption is as “easy as turning on Copilot” or “installing a custom GPT chatbot,” the Gnomes are gaining traction. AI is a tool and you need to teach people how to use tools, build processes to support the change, and demonstrate the benefit.

- Activity without Achievement: When MIT published research indicating that 95% of corporate Gen AI pilots were failing, it was a sign of just how deeply the Gnomes had infiltrated companies. Experiments are essential at the start of any new venture but only useful if they generate replicable and scalable learning.

How to defend against the AI Gnomes

Odds are the Gnomes are already in your company. But fear not, you can still turn “Phase 2: ?” into something that actually leads to “Phase 3: Profit.”

- Start with the end in mind: Be specific about the outcome you are trying to achieve. The answer should be agnostic of AI and tied to business goals.

- Design with people at the center: Achieving your desired outcomes requires rethinking and redesigning existing processes. Strategic creativity like that requires combining people, processes, and technology to achieve and embed.

- Develop with discipline: Just because you can (run a pilot, sign up for a free trial), doesn’t mean you should. Small-scale experiments require the same degree of discipline as multi-million-dollar digital transformations. So, if you can’t articulate what you need to learn and how it contributes to the bigger goal, move on.

AI, in all its forms, is here to stay. But the same doesn’t have to be true for the AI Gnomes.

Have you spotted the Gnomes in your company?

by Robyn Bolton | Aug 20, 2025 | AI, Metrics

Sometimes, you see a headline and just have to shake your head. Sometimes, you see a bunch of headlines and need to scream into a pillow. This week’s headlines on AI ROI were the latter:

- Companies are Pouring Billions Into A.I. It Has Yet to Pay Off – NYT

- MIT report: 95% of generative AI pilots at companies are failing – Forbes

- Nearly 8 in 10 companies report using gen AI – yet just as many report no significant bottom-line impact – McKinsey

AI has slipped into what Gartner calls the Trough of Disillusionment. But, for people working on pilots, it might as well be the Pit of Despair because executives are beginning to declare AI a fad and deny ever having fallen victim to its siren song.

Because they’re listening to the NYT, Forbes, and McKinsey.

And they’re wrong.

ROI Reality Check

In 2025, private investment in generative AI is expected to increase 94% to an estimated $62 billion. When you’re throwing that kind of money around, it’s natural to expect ROI ASAP.

But is it realistic?

Let’s assume Gen AI “started” (became sufficiently available to set buyer expectations and warrant allocating resources to) in late 2022/early 2023. That means that we’re expecting ROI within 2 years.

That’s not realistic. It’s delusional.

ERP systems “started” in the early 1990s, yet providers like SAP still recommend five-year ROI timeframes. Cloud computing “started” in the early 2000s, and yet, in 2025, “48% of CEOs lack confidence in their ability to measure cloud ROI.” CRM systems’ claims of 1-3 years to ROI must be considered in the context of their 50-70% implementation failure rate.

That’s not to say we shouldn’t expect rapid results. We just need to set realistic expectations around results and timing.

Measure ROI by Speed and Magnitude of Learning

In the early days of any new technology or initiative, we don’t know what we don’t know. It takes time to experiment and learn our way to meaningful and sustainable financial ROI. And the learnings are coming fast and furious:

Trust, not tech, is your biggest challenge: MIT research across 9,000+ workers shows automation success depends more on whether your team feels valued and believes you’re invested in their growth than which AI platform you choose.

Workers who experience AI’s benefits first-hand are more likely to champion automation than those told, “trust us, you’ll love it.” Job satisfaction emerged as the second strongest indicator of technology acceptance, followed by feeling valued. If you don’t invest in earning your people’s trust, don’t invest in shiny new tech.

More users don’t lead to more impact: Companies assume that making AI available to everyone guarantees ROI. Yet of the 70% of Fortune 500 companies deploying Microsoft 365 Copilot and similar “horizontal” tools (enterprise-wide copilots and chatbots), none have seen any financial impact.

The opposite approach of deploying “vertical” function-specific tools doesn’t fare much better. In fact, fewer than 10% make it past the pilot stage, despite having higher potential for economic impact.

Better results require reinvention, not optimization: McKinsey found that call centers that gave agents access to passive AI tools for finding articles, summarizing tickets, and drafting emails saw only a 5-10% reduction in call time. Centers using AI tools to automate tasks without agent initiation reduced call time by 20-40%.

Centers reinventing processes around AI agents? A 60-90% reduction in call time, with 80% of issues resolved automatically.

How to Climb Out of the Pit

Make no mistake, despite these learnings, we are in the pit of AI despair. 42% of companies are abandoning their AI initiatives. That’s up from 17% just a year ago.

But we can escape if we set the right expectations and measure ROI on learning speed and quality.

Because the real concern isn’t AI’s lack of ROI today. It’s whether you’re willing to invest in the learning process long enough to be successful tomorrow.